Introduction:

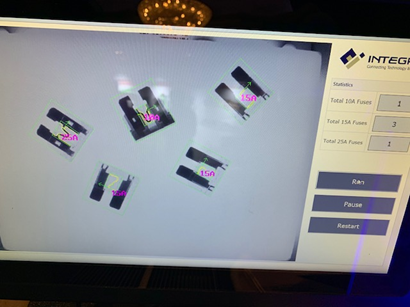

In today’s fast-paced technology industry, companies are constantly looking for ways to optimize their systems and improve efficiency. Integrys, a leading engineering firm, recently took on a challenging project: helping a client improve the performance of its existing servers without overhauling the entire computing system. Drawing on its expertise in needs assessment, design solutions, custom configuration, test and validation, and post-application support, Integrys once again showed why it is at the forefront of innovation.

The Challenges in Custom Design and Engineering:

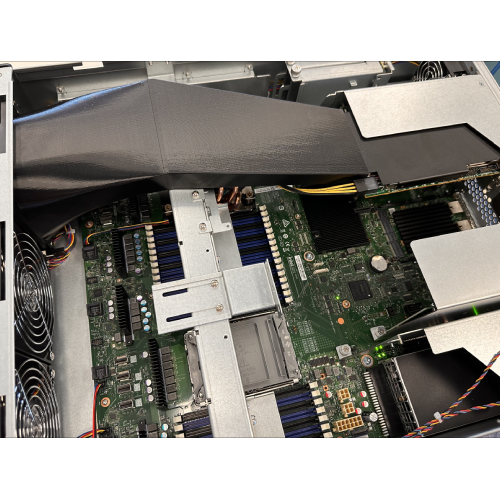

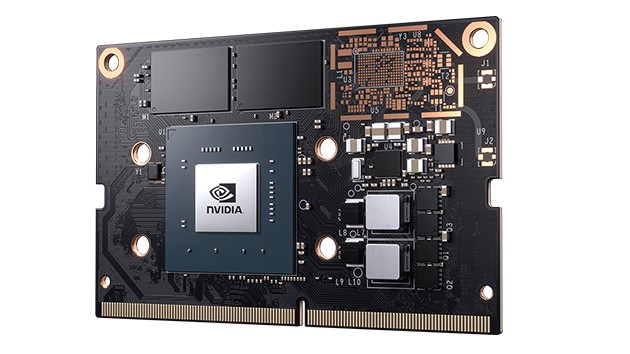

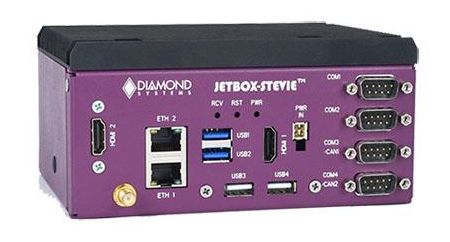

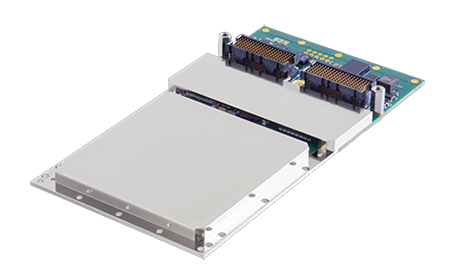

When operating at full capacity, the client’s servers generated a significant amount of heat from two main sources: a pair of powerful GPUs and an accelerated Network Interface Card from Intel. The excessive heat threatened the stability and longevity of the electronics inside the chassis, which called for a creative solution. Integrys faced several critical challenges in this project:

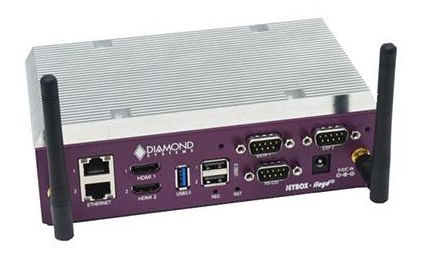

- Preserving the Existing Architecture: The solution could not require a redesign of the already-built chassis or motherboard, and the GPUs and Network Card had to remain in their existing positions.

- Cooling Multiple Heat Sources: Integrys needed a way to draw cool ambient air into the system and direct it to three separate locations to effectively cool the two GPUs and the Network Card.

- Precise Airflow Requirements: The engineering team had to achieve specific flow rates and temperatures within the constraints of the pre-existing architecture, which added further complexity.

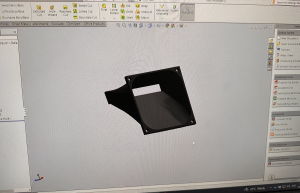

- Seamless Integration: The most demanding mechanical challenge was developing a duct that could be easily inserted into the pre-built chassis without dismantling the entire system.

The Custom Solution:

Led by Electro-Mechanical Designer Daniyal Jafri under the guidance of Engineering Manager Eric Buckley, the engineering team at Integrys combined its expertise in fluid mechanics, thermal science, and mechanical design to tackle these challenges head-on. By applying sound scientific principles and innovative thinking, the team devised a solution that achieved the required flow rates and temperatures while integrating seamlessly into the existing infrastructure.

The team’s initial design iterations used a compact duct built around an 80mm fan, which was 3D printed because of its complex geometry. While it met the flow rate requirements, it exceeded the acceptable acoustic noise levels. To address this, the team explored a larger 120mm fan, which provided a 4x increase in airflow at the same RPM. Running the 120mm fan at a lower speed to deliver the same airflow as the 80mm solution reduced noise levels while still meeting the cooling requirements.
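The speed-for-noise trade described above can be checked with the fan affinity laws. For a given fan, airflow scales linearly with speed (Q ∝ N), so a fan that moves 4x the air at the same RPM matches the original flow at about a quarter of the speed. The Python sketch below works through that arithmetic. The 6000 RPM starting speed is hypothetical, and the two other relations it uses are textbook approximations for geometrically similar fans rather than measurements from this project: the flow estimate Q ∝ D³ and the sound-power rule of thumb ΔL_w ≈ 70·log10(D₂/D₁) + 50·log10(N₂/N₁). Real 80mm and 120mm fans are rarely exactly similar, which is one reason a measured ratio such as 4x can differ from the idealized 3.375x.

```python
import math


def rpm_to_match_flow(rpm_small: float, flow_ratio_same_rpm: float) -> float:
    """Speed at which the larger fan delivers the smaller fan's airflow.

    Uses the first fan affinity law for a fixed fan and system (Q ∝ N):
    if the larger fan moves flow_ratio_same_rpm times more air at equal RPM,
    it needs only rpm_small / flow_ratio_same_rpm to match the flow.
    """
    return rpm_small / flow_ratio_same_rpm


def similar_fan_flow_ratio(d_large_mm: float, d_small_mm: float) -> float:
    """Idealized flow ratio at equal RPM for geometrically similar fans (Q ∝ D^3)."""
    return (d_large_mm / d_small_mm) ** 3


def sound_power_change_db(d_ratio: float, n_ratio: float) -> float:
    """Rule-of-thumb change in sound power level (dB) for similar fans."""
    return 70 * math.log10(d_ratio) + 50 * math.log10(n_ratio)


RPM_80 = 6000.0         # hypothetical operating speed of the 80mm fan
REPORTED_RATIO = 4.0    # 4x airflow at the same RPM, as stated in the text

rpm_120 = rpm_to_match_flow(RPM_80, REPORTED_RATIO)
print(rpm_120)                                                 # 1500.0
print(similar_fan_flow_ratio(120, 80))                         # 3.375
print(round(sound_power_change_db(120 / 80, rpm_120 / RPM_80), 1))  # -17.8
```

Under these idealized assumptions the larger, slower fan comes out roughly 18 dB lower in sound power, which agrees in direction with the noise reduction described above; the real figure depends on the actual fan curves and the duct's system resistance.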

Overcoming the size constraints imposed by the larger fan, the final duct design from Integrys met all of the client’s requirements. The design allows the duct to slide directly into the system, eliminating the need to remove metal panels inside the chassis during assembly. This significantly reduced assembly time and saved the client substantial labour costs.

Conclusion:

Integrys has once again showcased its ability to connect technology and innovation. By leveraging its engineering expertise, the team at Integrys devised a ground-breaking solution to enhance the efficiency of the client’s servers. By preserving the existing architecture, cooling multiple heat sources, meeting precise airflow requirements, and achieving seamless integration, Integrys delivered a state-of-the-art 3U server solution that exceeded expectations.

Integrys’s dedication and expertise enabled the client to optimize its system without a complete redesign, saving both time and resources. This successful collaboration exemplifies Integrys’s commitment to delivering cutting-edge engineering solutions and reaffirms its position as an industry leader.
