Image processing is the use of algorithms and mathematical models to process and analyze digital images. The goal of image processing is to enhance the quality of images, extract meaningful information from them, and automate image-based tasks. Image processing is important in many areas, like computer vision, medical imaging, and multimedia. This article discusses important areas in image processing and mentions Zebra Technologies’ Aurora Design Assistant software.

Understanding Deep Learning (DL) and Its Functioning:

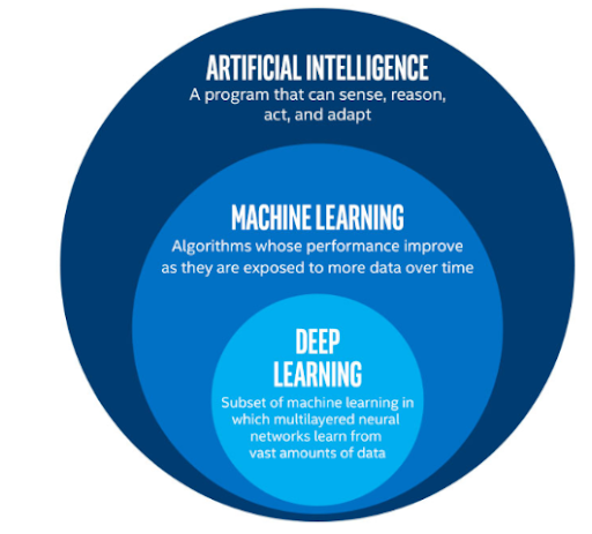

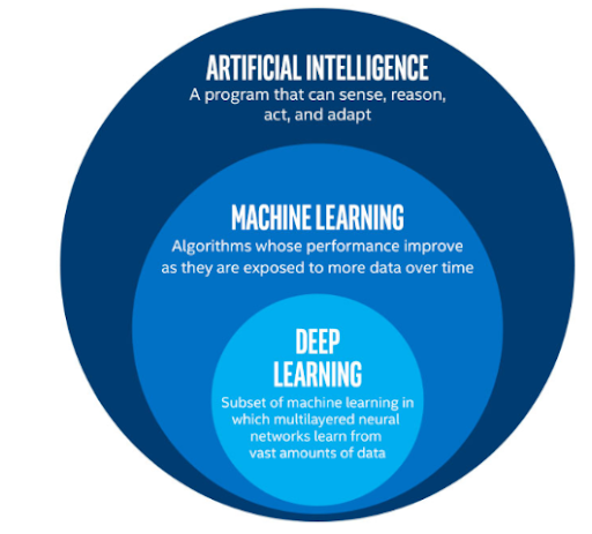

The fields of image processing and Deep Learning (DL) are complementary, especially in computer vision and machine learning tasks. DL is a subset of machine learning, which is itself a subset of artificial intelligence (AI). DL algorithms are designed to reach conclusions similar to those a human would reach by continually analyzing data with a given logical structure. To achieve this, DL uses a multi-layered structure of algorithms called neural networks.

The design of neural networks is loosely inspired by the structure of the human brain. Just as we use our brains to identify patterns and classify different types of information, we can train neural networks to perform the same tasks on data. DL has been highly successful in AI applications, contributing to breakthroughs in computer vision, language understanding, and reinforcement learning.
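To make the "multi-layered structure" concrete, here is a minimal sketch of a forward pass through a tiny two-layer network in plain Python. The weights, layer sizes, and the three-value "image" are hypothetical and hand-picked for illustration; a real network would learn its weights from training data and would be far larger.

```python
import math

def relu(x):
    # Common hidden-layer activation: pass positives, zero out negatives
    return max(0.0, x)

def sigmoid(x):
    # Squashes any real number into the range (0, 1)
    return 1.0 / (1.0 + math.exp(-x))

def dense(inputs, weights, biases, activation):
    """One layer: each output is activation(weighted sum of inputs + bias)."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]

# Hypothetical hand-picked weights (assumption: not taken from any trained model)
HIDDEN_W = [[0.5, -0.2, 0.1], [-0.3, 0.8, 0.4]]
HIDDEN_B = [0.0, 0.1]
OUTPUT_W = [[1.0, -1.0]]
OUTPUT_B = [0.0]

def forward(pixels):
    # Layer 1 turns raw pixel values into intermediate features
    hidden = dense(pixels, HIDDEN_W, HIDDEN_B, relu)
    # Layer 2 combines those features into a single score between 0 and 1
    return dense(hidden, OUTPUT_W, OUTPUT_B, sigmoid)[0]

score = forward([0.9, 0.1, 0.4])
print(f"score: {score:.3f}")
```

Each layer feeds its outputs to the next, which is what lets deeper networks build complex features out of simpler ones.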

Widely used deep learning applications:

DL has applications in a vast array of fields, including:

• Image recognition and speech recognition: DL is excellent at image classification, object detection, and facial recognition. It is used for tagging images, recognizing faces for security, and converting speech to text.

• Healthcare: DL is used for medical image analysis, disease diagnosis, and prognosis prediction. It aids in identifying patterns in medical images, such as detecting tumors in radiology scans.

• Autonomous vehicles: DL plays a key role in developing self-driving cars. It uses live data from sensors, cameras, and other sources to decide on steering, braking, and acceleration.

• Manufacturing and industry: DL is applied to predictive maintenance, quality control, and process optimization in manufacturing. It detects defects in products and predicts equipment failures using vision computers.

• Robotics: DL enables robots to perceive and respond to their environments, helping them perform complex tasks.

Deep Learning applications in computer vision:

Computer vision is a part of AI that helps computers analyze and process digital images. It uses algorithms and techniques to make decisions or suggestions based on the images. DL has made significant contributions to computer vision, including:

• Image classification: Categorization of images into predefined classes, fundamental to applications such as object recognition.

• Object detection: Detection of objects within images by providing bounding boxes around them, crucial where it’s necessary to identify and locate multiple objects in a single image, such as in autonomous vehicles or surveillance systems.

• Facial recognition: Key to identity verification, access control, and security. DL models can identify and match facial features against a database of known faces.

• Image segmentation: Segmentation of images into meaningful regions or objects, valuable in medical imaging for identifying and isolating specific structures within images.

• Medical image analysis: Used in medical imaging tasks, such as detecting and diagnosing diseases from X-rays, MRIs, and CT scans.

• Augmented reality (AR): Enhances the capabilities of AR applications by enabling real-time recognition and tracking of objects.
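Object detectors report each object as a bounding box, and one common way to compare a predicted box with a reference box is intersection over union (IoU). The sketch below is illustrative only: the `(x_min, y_min, x_max, y_max)` box format and the example coordinates are assumptions, not something specified in this article.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x_min, y_min, x_max, y_max)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b

    # Width and height of the overlapping rectangle (zero if the boxes are disjoint)
    inter_w = max(0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h

    area_a = (ax2 - ax1) * (ay2 - ay1)
    area_b = (bx2 - bx1) * (by2 - by1)
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

# Hypothetical boxes in pixel coordinates
predicted = (10, 10, 50, 50)
reference = (30, 30, 70, 70)
overlap = iou(predicted, reference)
print(f"IoU: {overlap:.3f}")
```

An IoU of 1.0 means the boxes coincide exactly and 0.0 means they do not overlap at all; what counts as a "good enough" match depends on the application.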

What is the role of Deep Learning in machine vision?

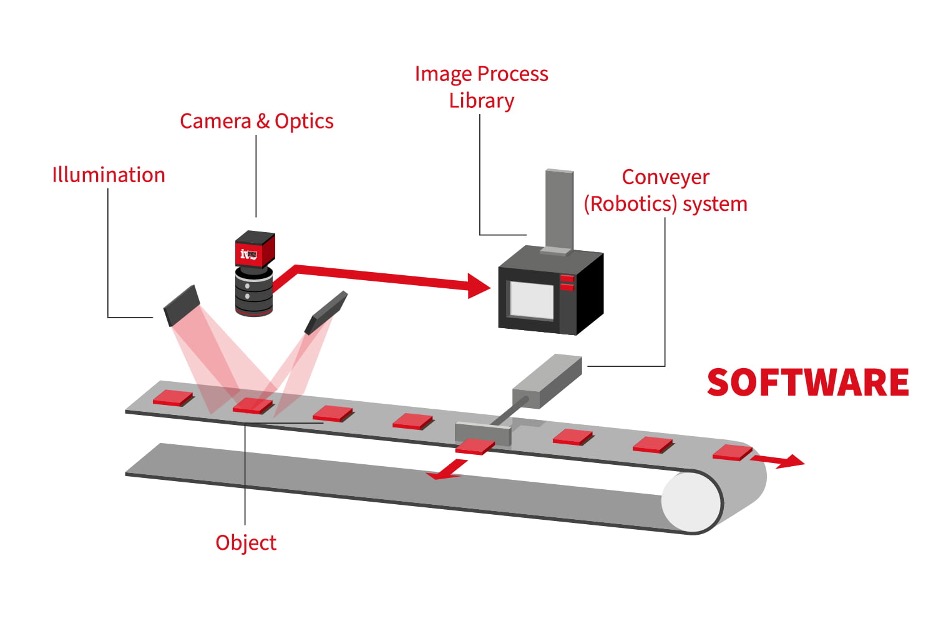

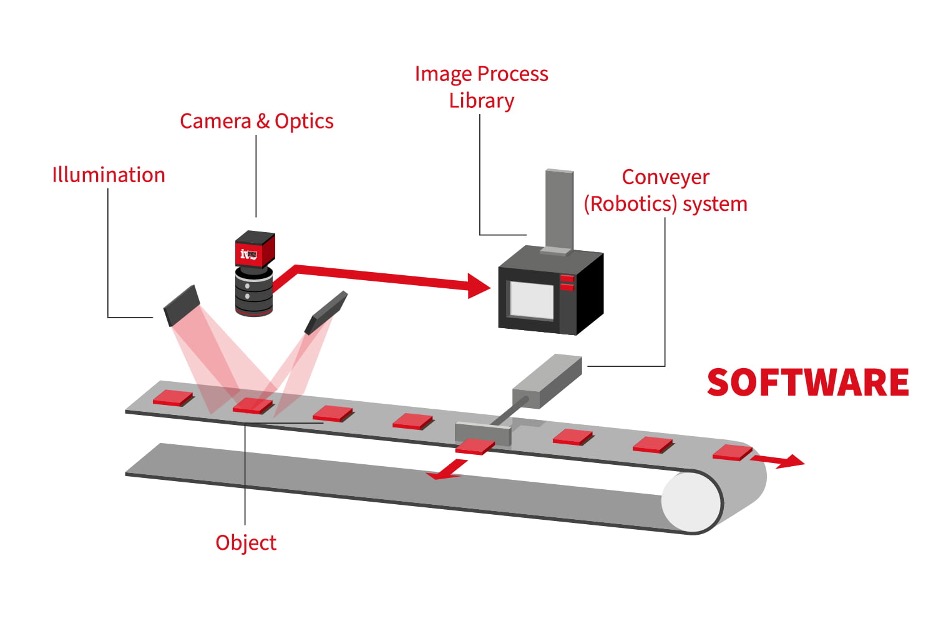

7 elements of a machine vision system

DL plays a crucial role in machine vision by providing advanced techniques for processing and understanding visual information. Key roles in machine vision include:

• Feature learning: DL excels at automatically learning hierarchical features from raw visual data. This is essential in machine vision applications, where identifying relevant patterns and features in images is crucial for decision-making.

• Object recognition and classification: DL enables accurate and efficient object recognition and classification. Machine vision systems can use deep neural networks to categorize objects in images, valuable in quality control in manufacturing.

• Object detection: DL is used for object detection tasks in machine vision. It can identify and locate multiple objects within an image, important in robotics and autonomous vehicles.

• Image segmentation: DL techniques are used for image segmentation in machine vision. This involves dividing an image into meaningful segments, which is useful in medical image analysis and scene understanding.

• Anomaly detection: DL models can recognize normal patterns and detect anomalies in visual data. Quality control, surveillance, and monitoring systems apply it to identify deviations.

• 3D vision: DL supports 3D vision tasks by processing multiple images or using depth-sensing technologies. This is vital in applications like robotic navigation.

• Document and text recognition: DL models are used for optical character recognition (OCR) and document analysis. This aids in automatically extracting information from textual content in images.

• Biometric recognition: DL enhances biometric recognition systems by providing accurate algorithms for face recognition, fingerprint recognition, and other biometric modalities.
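The anomaly detection idea above (learn what "normal" looks like, then flag deviations) can be illustrated without any neural network. The sketch below swaps in a simple statistical stand-in: it builds a profile from a single measurement taken on known-good parts and flags values that fall far outside it. The brightness readings, function names, and the threshold of 3 standard deviations are all hypothetical; a DL-based system would learn a much richer notion of "normal" directly from images.

```python
from statistics import mean, stdev

def fit_normal_profile(values):
    """Summarize known-good measurements as (mean, standard deviation)."""
    return mean(values), stdev(values)

def is_anomaly(value, profile, threshold=3.0):
    """Flag a value lying more than `threshold` standard deviations from the mean."""
    mu, sigma = profile
    if sigma == 0:
        # All training values were identical, so any difference is a deviation
        return value != mu
    return abs(value - mu) / sigma > threshold

# Hypothetical mean-brightness readings from images of known-good parts
good_parts = [100.2, 99.8, 100.5, 99.9, 100.1, 100.0, 99.7, 100.3]
profile = fit_normal_profile(good_parts)

print(is_anomaly(100.4, profile))  # close to the good readings
print(is_anomaly(92.0, profile))   # far from the good readings
```

The appeal of this pattern for quality control is that it only needs examples of good parts, which are usually far easier to collect than examples of every possible defect.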

How can machine learning benefit image recognition?

Machine learning brings efficiency, accuracy, and adaptability to image recognition tasks, making it a powerful tool for a wide range of applications. Its main benefits include:

• Automated feature extraction: Machine learning, especially DL, automates the feature extraction process by learning relevant features directly from the data.

• Improved accuracy: Machine learning algorithms perform exceptionally well in image recognition tasks. They can learn hierarchical features, allowing them to recognize patterns and objects in images with great accuracy.

• Adaptability to varied data: Well-trained machine learning models can generalize to new and diverse datasets. This adaptability is crucial in image recognition, where appearances may vary with lighting conditions, angles, and backgrounds.

• Object detection and localization: Machine learning algorithms enable the classification of objects and the localization of their positions within an image. This is essential for autonomous vehicles, robotics, and surveillance.

• Semantic segmentation: Machine learning techniques can perform semantic segmentation by classifying each pixel in an image. This promotes understanding of the spatial relationships and boundaries between different objects.
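As a rough illustration of "classifying each pixel", the sketch below takes a grid of per-pixel class scores and picks the highest-scoring class for every pixel. The class names and score values are hypothetical; in practice the scores would come from a trained segmentation model rather than being typed in by hand.

```python
# Hypothetical class labels, for illustration only
CLASS_NAMES = ["background", "part", "defect"]

def segment(score_map):
    """score_map[row][col] is a list of per-class scores.

    Returns a grid of the same shape holding the index of the
    highest-scoring class for each pixel.
    """
    return [
        [max(range(len(scores)), key=scores.__getitem__) for scores in row]
        for row in score_map
    ]

# A 2x2 "image" with three class scores per pixel (made-up values)
scores = [
    [[0.9, 0.1, 0.0], [0.2, 0.7, 0.1]],
    [[0.1, 0.8, 0.1], [0.1, 0.3, 0.6]],
]

mask = segment(scores)
for row in mask:
    print([CLASS_NAMES[i] for i in row])
```

The resulting mask has one label per pixel, which is what makes it possible to trace boundaries between objects rather than only drawing boxes around them.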

Which is the top-rated software for machine vision?

Integrys considers Aurora Design Assistant (Aurora DA) the best software for digital image processing on the market today for the following reasons:

Flowchart-based development: Aurora DA helps you create apps quickly without coding by using flowchart steps for building and configuring applications. It offers no-code computer vision, allowing anyone to apply artificial intelligence without writing a line of code. The IDE also lets users design a custom web-based operator interface.

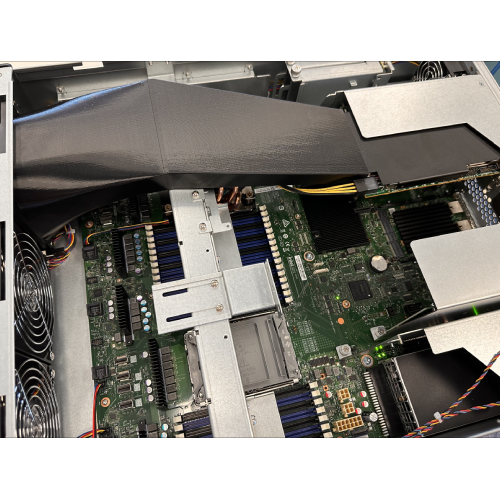

Flexible deployment options: Choose your platform within a hardware-neutral environment that is compatible with both branded and third-party smart cameras, vision controllers, and PCs. It supports CoaXPress, GigE Vision, and USB3 Vision camera interfaces.

Streamlined communication: Easily share actions and results with other machines using I/Os and various communication protocols in real time.

Increased productivity and reduced development costs: Vision Academy offers online and on-site training for users to enhance their software skills on specific topics.

Contact Us:

To learn more about Integrys computer vision projects and products such as Aurora Design Assistant software, or to request a quote, contact us.